Google’s crawler, commonly known as Googlebot, sees and interprets web pages differently from how a human user does. It primarily processes the underlying HTML code of a page rather than the visual layout.

Here’s a detailed look at how Googlebot views and processes web pages:

1. Fetching the Page

- Initial Request: Googlebot makes an HTTP request to the web server hosting the page. This is similar to what happens when a user accesses a webpage through a browser.

- Response: The server responds with an HTTP status code, headers, and the HTML content of the page. Resources referenced in that HTML, such as CSS, JavaScript, images, and other media files, are fetched in separate requests.
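The fetch step can be sketched with Python's standard library. This is a minimal illustration, not a description of Google's infrastructure. It only builds the request, using the classic desktop Googlebot User-Agent string that Google documents, and example.com is a placeholder URL. Passing the request to urllib.request.urlopen would perform the actual fetch.

```python
import urllib.request

# Classic desktop Googlebot User-Agent string, as listed in Google's crawler documentation.
GOOGLEBOT_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"


def build_crawl_request(url: str) -> urllib.request.Request:
    """Build (but do not send) a GET request that identifies itself like Googlebot."""
    return urllib.request.Request(
        url,
        method="GET",
        headers={
            "User-Agent": GOOGLEBOT_UA,
            "Accept": "text/html,application/xhtml+xml",
        },
    )


if __name__ == "__main__":
    req = build_crawl_request("https://example.com/")  # placeholder URL
    # urllib stores header names via str.capitalize(), hence "User-agent" here.
    print(req.get_method(), req.full_url, req.get_header("User-agent"))
    # To perform the fetch: html = urllib.request.urlopen(req).read()
```

Anyone can send this User-Agent string, which is why Google recommends verifying genuine Googlebot traffic with a reverse DNS lookup rather than trusting the header.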

2. Rendering the Page

- Rendering Engine: Googlebot passes the fetched page to Google's Web Rendering Service (WRS), which uses an up-to-date version of Chromium to process the HTML, CSS, and JavaScript and render the page much as a browser would.

- Executing JavaScript: Googlebot executes JavaScript on the page to ensure that dynamically generated content is also fetched and processed. This is crucial for modern websites that heavily rely on JavaScript frameworks for content delivery.

3. Parsing the Content

- HTML Parsing: Googlebot parses the HTML to extract the main content of the page, including text, meta tags, headers, links, and structured data.

- CSS and Layout: While Googlebot doesn’t focus on the visual presentation, it does parse CSS to understand the layout and visibility of elements. This helps determine which content is visible to users.

- Handling JavaScript: For JavaScript-heavy pages, Googlebot executes the scripts to ensure it captures all the content and navigation paths that users would see.
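To make the parsing step concrete, here is a small sketch built on Python's built-in html.parser. It pulls out the title, meta tags, headings, links, and the text outside script and style elements. It works on raw HTML only, so it reflects what a crawler sees before rendering; the class name PageSummary is hypothetical, and Google's real parsing pipeline is far more involved.

```python
from html.parser import HTMLParser


class PageSummary(HTMLParser):
    """Collect a few signals a crawler typically extracts from raw HTML (illustrative only)."""

    HEADINGS = {"h1", "h2", "h3", "h4", "h5", "h6"}
    SKIP = {"script", "style"}  # text inside these elements is not page content

    def __init__(self):
        super().__init__()
        self.title = ""
        self.meta = {}       # meta name -> content
        self.headings = []   # (tag, text) pairs
        self.links = []      # href values from <a> elements
        self.text = []       # text fragments outside script/style
        self._stack = []     # open elements this sketch tracks

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "meta" and attrs.get("name"):
            self.meta[attrs["name"].lower()] = attrs.get("content") or ""
        elif tag == "a" and attrs.get("href"):
            self.links.append(attrs["href"])
        if tag == "title" or tag in self.HEADINGS or tag in self.SKIP:
            self._stack.append(tag)

    def handle_endtag(self, tag):
        if self._stack and self._stack[-1] == tag:
            self._stack.pop()

    def handle_data(self, data):
        data = data.strip()
        if not data:
            return
        current = self._stack[-1] if self._stack else None
        if current in self.SKIP:
            return
        if current == "title":
            self.title += data
            return
        if current in self.HEADINGS:
            self.headings.append((current, data))
        self.text.append(data)


if __name__ == "__main__":
    page = PageSummary()
    page.feed(
        '<html><head><title>Blue Widgets</title>'
        '<meta name="description" content="Hand-made blue widgets.">'
        '<style>h1 {color: blue}</style></head>'
        '<body><h1>Blue Widgets</h1><p>Built to last.</p>'
        '<a href="/about">About us</a><script>var x = 1;</script></body></html>'
    )
    print(page.title)     # Blue Widgets
    print(page.headings)  # [('h1', 'Blue Widgets')]
    print(page.text)      # ['Blue Widgets', 'Built to last.', 'About us']
```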

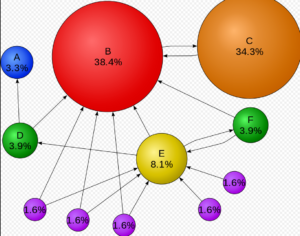

4. Crawling Links

- Internal and External Links: Googlebot follows internal links (links within the same website) and external links (links to other websites) found on the page. This helps it discover new content and pages to crawl.

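- Rel Attributes: Attributes like rel="nofollow" (and the related rel="sponsored" and rel="ugc") signal to Googlebot that it should not follow certain links or pass link equity through them, affecting how link equity is distributed. Google treats these attributes as hints rather than strict directives.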
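Link classification can be sketched with urllib.parse: resolve each href against the page URL, mark it internal or external by comparing hostnames, and check whether its rel attribute contains nofollow. The function name and the (href, rel) input format are assumptions for illustration.

```python
from urllib.parse import urljoin, urlparse


def classify_links(page_url, links):
    """links: iterable of (href, rel) pairs; rel may be None.

    Returns one dict per http(s) link with the absolute URL, whether it is
    internal (same hostname as the page), and whether rel contains "nofollow".
    """
    page_host = urlparse(page_url).hostname
    results = []
    for href, rel in links:
        absolute = urljoin(page_url, href)         # resolve relative URLs
        parts = urlparse(absolute)
        if parts.scheme not in ("http", "https"):  # skip mailto:, javascript:, etc.
            continue
        rel_values = (rel or "").lower().split()   # rel may hold several tokens
        results.append({
            "url": absolute,
            # Strict hostname match: www.example.com and example.com count as different.
            "internal": parts.hostname == page_host,
            "nofollow": "nofollow" in rel_values,
        })
    return results


if __name__ == "__main__":
    links = [
        ("/pricing", None),
        ("https://other.example/", "nofollow ugc"),
        ("mailto:hi@example.com", None),
    ]
    for link in classify_links("https://example.com/blog/post", links):
        print(link)
```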

5. Reading Meta Tags and Directives

- Meta Robots Tag: Googlebot reads meta tags in the HTML <head> section for instructions on how to handle the page. Common directives include noindex (do not index this page), nofollow (do not follow links on this page), and noarchive (do not cache a copy of this page).

- X-Robots-Tag: Similar directives can be given in HTTP response headers using the X-Robots-Tag. This provides control over resources that cannot carry a meta tag, such as PDFs and images.
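A minimal sketch of how directives from both sources might be combined: the content of a robots meta tag and the value of an X-Robots-Tag header are each split on commas and merged into one set. The function names are hypothetical, and the sketch deliberately does not interpret crawler-specific forms such as "googlebot: noindex" or parameterized rules such as "max-snippet: 50". It does treat "none" as equivalent to noindex plus nofollow, as Google documents.

```python
def robots_directives(meta_content=None, x_robots_header=None):
    """Merge directives from <meta name="robots" content="..."> and an X-Robots-Tag header.

    Simplified: user-agent-specific and parameterized rules are kept as raw tokens.
    """
    directives = set()
    for source in (meta_content, x_robots_header):
        if source:
            for token in source.split(","):
                token = token.strip().lower()
                if token:
                    directives.add(token)
    return directives


def may_index(directives):
    # "none" is shorthand for "noindex, nofollow".
    return not ({"noindex", "none"} & directives)


def may_follow(directives):
    return not ({"nofollow", "none"} & directives)


if __name__ == "__main__":
    d = robots_directives("noindex, nofollow", None)
    print(may_index(d), may_follow(d))  # False False
    d = robots_directives(None, "noarchive")
    print(may_index(d), may_follow(d))  # True True
```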

6. Handling Structured Data

- Schema.org Markup: Googlebot recognizes Schema.org structured data written in formats such as JSON-LD, Microdata, and RDFa. This helps it understand the content's context and enables search result features like rich snippets.

- Rich Results: Pages with structured data can be eligible for enhanced search results, which can improve visibility and click-through rates.
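JSON-LD is the easiest of these formats to inspect programmatically, because it sits in a script element of type application/ld+json. The sketch below extracts and decodes those blocks with Python's standard library. The class name is hypothetical, and Microdata or RDFa would need attribute-level parsing that this sketch does not attempt.

```python
import json
from html.parser import HTMLParser


class JsonLdExtractor(HTMLParser):
    """Collect and decode every <script type="application/ld+json"> block."""

    def __init__(self):
        super().__init__()
        self.items = []
        self._in_jsonld = False
        self._buffer = []

    def handle_starttag(self, tag, attrs):
        script_type = (dict(attrs).get("type") or "").lower()
        if tag == "script" and script_type == "application/ld+json":
            self._in_jsonld = True
            self._buffer = []

    def handle_data(self, data):
        if self._in_jsonld:
            self._buffer.append(data)

    def handle_endtag(self, tag):
        if tag == "script" and self._in_jsonld:
            self._in_jsonld = False
            try:
                self.items.append(json.loads("".join(self._buffer)))
            except json.JSONDecodeError:
                pass  # malformed JSON-LD is skipped in this sketch


if __name__ == "__main__":
    parser = JsonLdExtractor()
    parser.feed(
        '<script type="application/ld+json">'
        '{"@context": "https://schema.org", "@type": "Article", "headline": "Blue widgets review"}'
        "</script>"
    )
    print(parser.items[0]["@type"])  # Article
```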

7. Considering Robots.txt

- Access Restrictions: Before fetching a page, Googlebot checks the website's robots.txt file. This file provides rules on which parts of the site should not be crawled. If a page or directory is disallowed in robots.txt, Googlebot will not crawl it, although the URL can still be indexed without its content if other pages link to it.

- Crawl-Delay and Sitemap: The robots.txt file can also point to the location of XML sitemaps. Some crawlers honor a crawl-delay directive in robots.txt, but Googlebot ignores it.
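Python's standard urllib.robotparser can illustrate how robots.txt rules are evaluated, with two caveats: it applies the first matching rule in a group, whereas Google uses the most specific (longest) matching path, so results can differ when Allow and Disallow rules overlap; and it reports Crawl-delay even though Googlebot ignores that directive. The robots.txt content below is a made-up example.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt for example.com
ROBOTS_TXT = """\
User-agent: Googlebot
Disallow: /private/

User-agent: *
Disallow: /tmp/
Crawl-delay: 10

Sitemap: https://example.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# Googlebot matches its own group, so only /private/ is blocked for it.
print(parser.can_fetch("Googlebot", "https://example.com/private/report.html"))  # False
print(parser.can_fetch("Googlebot", "https://example.com/tmp/cache.html"))       # True
# Other crawlers fall back to the "*" group.
print(parser.can_fetch("OtherBot", "https://example.com/tmp/cache.html"))        # False
print(parser.crawl_delay("OtherBot"))                                            # 10
print(parser.site_maps())  # ['https://example.com/sitemap.xml']
```

Note that a crawler matching a specific group (here Googlebot) does not also inherit the rules of the "*" group, which is why /tmp/ stays crawlable for it.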

8. Processing the Page Content

- Indexing: After fetching and rendering the page, Googlebot sends the content to Google’s indexing system. The page is then analyzed for relevance to various search queries.

- Content Analysis: Google's indexing systems, rather than the crawler itself, evaluate the page's content, keywords, and overall context to understand what the page is about and how it should be ranked in search results.
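Google does not publish how its index is built, but the classic data structure behind search indexing is an inverted index that maps each term to the pages containing it. The toy sketch below only illustrates that idea; the tokenizer, the scoring by raw term counts, and all function names are deliberately naive assumptions and bear no relation to Google's ranking.

```python
import re
from collections import defaultdict


def tokenize(text):
    """Lowercase and split on non-alphanumeric characters (deliberately naive)."""
    return re.findall(r"[a-z0-9]+", text.lower())


def build_index(pages):
    """pages: dict of url -> text. Returns term -> {url: occurrence count}."""
    index = defaultdict(lambda: defaultdict(int))
    for url, text in pages.items():
        for term in tokenize(text):
            index[term][url] += 1
    return index


def search(index, query):
    """Return URLs containing every query term, ranked by total term count."""
    terms = tokenize(query)
    if not terms:
        return []
    matches = set(index.get(terms[0], {}))
    for term in terms[1:]:
        matches &= set(index.get(term, {}))
    return sorted(matches, key=lambda url: -sum(index[t][url] for t in terms))


if __name__ == "__main__":
    pages = {
        "https://example.com/widgets": "Blue widgets. Our widgets are blue and durable.",
        "https://example.com/about": "About our company and our blue logo.",
    }
    index = build_index(pages)
    print(search(index, "blue widgets"))  # ['https://example.com/widgets']
    print(search(index, "blue"))  # ['https://example.com/widgets', 'https://example.com/about']
```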

9. Handling Dynamic and Interactive Content

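- Single Page Applications (SPAs): For SPAs, Googlebot executes the JavaScript needed to render the application. Because Googlebot does not click buttons or otherwise interact with the page, each view should be reachable through its own URL, linked with a standard <a href> element, for its content to be discovered and indexed.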

- Lazy Loading: Googlebot can index lazy-loaded images and other resources, provided they load when they become visible in the viewport. Content that only loads after the user scrolls, clicks, or performs some other action may be missed.
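Google's JavaScript SEO guidance says Googlebot follows links only when they are <a> elements with an href attribute that resolves to a URL, so SPA navigation driven purely by click handlers or hash fragments may not be discovered. The sketch below flags anchors a crawler would likely not follow; the class name and the exact heuristics (missing href, javascript: pseudo-URLs, fragment-only hrefs) are assumptions for illustration.

```python
from html.parser import HTMLParser


class LinkAudit(HTMLParser):
    """Split <a> elements into likely-crawlable and likely-uncrawlable links."""

    def __init__(self):
        super().__init__()
        self.crawlable = []    # href values a crawler can fetch
        self.uncrawlable = []  # attribute dicts of anchors it probably cannot

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        attrs = dict(attrs)
        href = (attrs.get("href") or "").strip()
        # No href, a javascript: pseudo-URL, or a fragment-only link
        # gives the crawler no new URL to fetch.
        if not href or href.lower().startswith("javascript:") or href.startswith("#"):
            self.uncrawlable.append(attrs)
        else:
            self.crawlable.append(href)


if __name__ == "__main__":
    audit = LinkAudit()
    audit.feed(
        '<a href="/products">Products</a>'
        '<a onclick="goTo(\'/cart\')">Cart</a>'
        '<a href="#/settings">Settings</a>'
    )
    print(audit.crawlable)         # ['/products']
    print(len(audit.uncrawlable))  # 2
```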

10. Mobile-First Indexing

- Mobile Rendering: With mobile-first indexing, Googlebot primarily uses the mobile version of the content for indexing and ranking. This means it crawls and renders the page as a mobile user would see it.

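- Responsive Design: Responsive sites serve the same HTML and content to every device, which avoids the risk that the mobile version Googlebot indexes is missing content that appears on desktop. Google recommends responsive design as the easiest pattern to implement and maintain.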
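Responsive pages typically declare a viewport meta tag so that mobile browsers, and a smartphone crawler rendering the page, lay it out at device width. The sketch below checks for that tag; it is only a heuristic, since a viewport tag alone does not make a page mobile-friendly, and the names ViewportCheck and has_responsive_viewport are hypothetical.

```python
from html.parser import HTMLParser


class ViewportCheck(HTMLParser):
    """Record the content of <meta name="viewport"> if present."""

    def __init__(self):
        super().__init__()
        self.viewport = None

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "meta" and (attrs.get("name") or "").lower() == "viewport":
            self.viewport = attrs.get("content") or ""


def has_responsive_viewport(html):
    """True if the page declares a viewport that uses width=device-width."""
    checker = ViewportCheck()
    checker.feed(html)
    return (
        checker.viewport is not None
        and "width=device-width" in checker.viewport.replace(" ", "")
    )


if __name__ == "__main__":
    html = '<head><meta name="viewport" content="width=device-width, initial-scale=1"></head>'
    print(has_responsive_viewport(html))  # True
```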

Conclusion

To tie these steps together, here is how Googlebot reads a typical HTML document from top to bottom:

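- The first thing Googlebot encounters is the <!DOCTYPE> declaration. In HTML5 this is simply <!DOCTYPE html>, which tells browsers and crawlers to process the page as a standards-mode HTML document rather than naming a specific HTML version.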

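- Next comes the <html> element, which may carry a lang attribute declaring the page's language. This helps browsers and assistive technologies, although Google has stated that it determines a page's language from its visible content rather than from the lang attribute.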

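- After that, Googlebot looks at the <head> element. It contains the <title> element, which appears in the browser tab rather than in the page body and is often used as the title link in search results, and the meta description tag, which gives a short summary of the page that may appear as the snippet in search results.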

- The <head> element may also contain links to external resources, such as stylesheets, scripts, icons, and fonts, that affect how the page looks and behaves.

- The <body> tag may have various elements that structure and format the content, such as headings (<h1>, <h2>, etc.), paragraphs (<p>), lists (<ul>, <ol>, etc.), tables (<table>), images (<img>), links (<a>), forms (<form>), and more.
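The reading order described above can be traced with a small html.parser subclass that logs the doctype, the lang attribute of <html>, the <title>, the meta description, linked resources, and a few body elements in the order they are encountered. The class name, the event format, and the small set of body tags tracked are illustrative choices, not how Googlebot records a page.

```python
from html.parser import HTMLParser


class ReadingOrder(HTMLParser):
    """Log selected document parts in the order a parser encounters them."""

    BODY_TAGS = {"body", "h1", "h2", "p", "img", "a"}  # small illustrative subset

    def __init__(self):
        super().__init__()
        self.events = []
        self._in_title = False

    def handle_decl(self, decl):
        self.events.append(("doctype", decl))  # e.g. "DOCTYPE html"

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "html":
            self.events.append(("html", attrs.get("lang")))
        elif tag == "title":
            self._in_title = True
        elif tag == "meta" and (attrs.get("name") or "").lower() == "description":
            self.events.append(("description", attrs.get("content")))
        elif tag == "link":
            self.events.append(("resource", attrs.get("href")))
        elif tag in self.BODY_TAGS:
            self.events.append((tag, attrs.get("href") or attrs.get("src")))

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title and data.strip():
            self.events.append(("title", data.strip()))


if __name__ == "__main__":
    doc = """<!DOCTYPE html>
<html lang="en">
<head>
  <title>Blue Widgets</title>
  <meta name="description" content="Hand-made blue widgets.">
  <link rel="stylesheet" href="/style.css">
</head>
<body><h1>Blue Widgets</h1><p>Built to last.</p></body>
</html>"""
    parser = ReadingOrder()
    parser.feed(doc)
    for event in parser.events:
        print(event)
```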

Googlebot sees pages primarily through the HTML and rendered content, including JavaScript and CSS, to ensure it captures all visible and important information. By understanding how Googlebot views and processes pages, webmasters can optimize their sites to ensure that all valuable content is accessible and properly indexed, enhancing their visibility in search engine results. This involves creating clean HTML, using proper meta tags, ensuring JavaScript is crawlable, and providing structured data to improve the understanding and presentation of content in search results.